Part of why AI chatbots are so dreadful is we know the corporation / agency doesn’t care whether our problem gets resolved or not.

Please note: Of Two Minds subscription rates are going up this Friday 3/1/24 from $5/month or $50/year to $7/month or $70/year, so subscribe by Thursday if you want to lock in current rates. If you’re considering subscribing, please read the comment from Substack subscriber Kelly at the end of the post.

Click-bait-scary forecasts of hundreds of millions of jobs lost to AI are as ubiquitous as incompetent AI chatbots. Richard Bonugli and I recently took a more nuanced look at AI Job Challenges and Trends, with the goal not of throwing the baby out with the bathwater (i.e. concluding all AI is junk science) but of focusing on AI’s limits in real-world problem-solving.

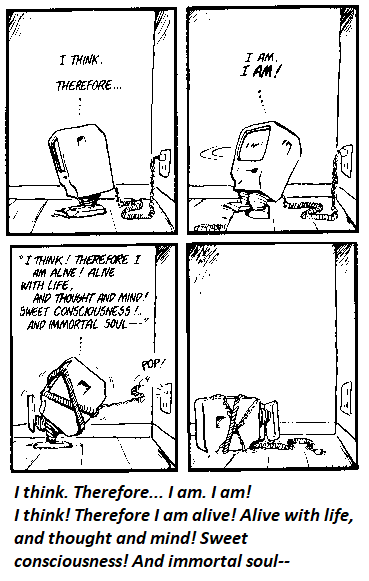

We can summarize these limits in one question: who error-corrects AI? The intrinsic problem with data-harvesting machine learning, the essence of Large Language Model (LLM) AI and other machine learning approaches, is the illusion of precision: the model selects the correct diagnosis 95% of the time, but who’s going to error-correct the vital 5%?

Consider being a cancer patient who receives an all-clear/no-cancer diagnosis from an AI-processed scan. In other words, consider the consequences of the AI tool being wrong 5% of the time. In the case of cancer diagnoses, a wrong diagnosis can be a death sentence, or it can open a pathway to unnecessary treatments and surgeries.

The illusion of precision leads to fatal assumptions: if the AI error rate is “only” 5%, but the majority of the 5% errors are the most consequential, then the entire idea of basing accuracy on the percentage of correct / incorrect hits is grievously flawed. In effect, AI might be accurate on the 95% of cases with limited consequences and mostly inaccurate on the cases that really matter, but this reality is lost in the claim that it’s 95% accurate.
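To make the arithmetic concrete, here is a minimal sketch in Python. Every number in it is invented for illustration: the split between routine and critical cases, the error counts, and the cost weights are all assumptions, not measurements from any real diagnostic system. The names `accuracy`, `accuracy_within` and `share_of_harm` are hypothetical helpers, not part of any library.

```python
# Hypothetical illustration: all counts and cost weights below are invented
# to show the arithmetic; none come from a real diagnostic system.

def accuracy(cases):
    """Fraction of all cases the model got right."""
    total = sum(c["count"] for c in cases)
    correct = sum(c["count"] - c["errors"] for c in cases)
    return correct / total


def accuracy_within(cases, kind):
    """Accuracy restricted to one kind of case."""
    return accuracy([c for c in cases if c["kind"] == kind])


def share_of_harm(cases, kind):
    """Share of the total consequence-weighted error cost caused by one kind of case."""
    total_cost = sum(c["errors"] * c["cost_per_error"] for c in cases)
    kind_cost = sum(
        c["errors"] * c["cost_per_error"] for c in cases if c["kind"] == kind
    )
    return kind_cost / total_cost


# Assumed mix: 1,000 cases, 50 errors in total (a 5% headline error rate),
# with most of those errors landing on the small group of critical cases.
cases = [
    {"kind": "routine", "count": 920, "errors": 5, "cost_per_error": 1},
    {"kind": "critical", "count": 80, "errors": 45, "cost_per_error": 100},
]

if __name__ == "__main__":
    print(f"Headline accuracy:          {accuracy(cases):.2%}")
    print(f"Accuracy on routine cases:  {accuracy_within(cases, 'routine'):.2%}")
    print(f"Accuracy on critical cases: {accuracy_within(cases, 'critical'):.2%}")
    print(f"Harm from critical errors:  {share_of_harm(cases, 'critical'):.2%}")
```

Under these assumed numbers the headline accuracy is still 95%, yet the model is right on fewer than half of the critical cases, and those cases account for more than 99% of the weighted harm. The single percentage hides exactly the part that matters.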

Data harvesting machine learning is useless when problem-solving boils down to individual cases. Consider a modern vehicle, which is essentially a rolling platform of software. Each vehicle has a diagnostic port that the mechanic uses to detect what system / component has failed, but this doesn’t automatically solve 100% of the problems that crop up in complex machines.

Having a model that predicts the likelihood of the source of unidentified mechanical problems is useful in the sense that the model predicts where to start the investigation, but it doesn’t actually identify the problem with this vehicle. That requires a physical presence and experience beyond any model’s guesstimate. Someone has to actually drop the engine to reach the failed control board. That someone performs both the essential tasks in actually repairing the vehicle: error-correction and the physical work of doing the repair.

The physician who reviews the AI scan results brings real-world experience that cannot be codified in data harvesting.

AI is being touted in cases that largely fall into the service sector such as customer service. (As I’ve outlined recently in Digital Service Dumpster Fires and Shadow Work, the real-world results have been abysmal, simply reinforcing the trend of making customers do all the work, i.e. shadow work.)

In the real world of work, AI can’t actually repair the rotted handrailing or install the piping. AI tools may well offer potentially useful guidelines or help get the needed materials onsite logistically, but the actual work in the field is most cost-effectively performed by humans with long experience.

Another intrinsic limit in AI is the high-touch, low-touch divide. A physician with 40 years of experience recently told me that patients report feeling better after being seen by a nurse or doctor, and we can intuit why: they feel better because someone cares about them and their health enough to actually be physically present. Another experienced physician once told me that he’d concluded many of his patients sought an appointment with him just to have someone listen to them.

These are examples of high-touch experiences that cannot be replaced with low-touch robotic voices and printouts. There are many others. Do you want your hair cut by your barber, who has become a friend of sorts, or a robot? Do you recall with fondness a particular dinner because the wait staff was charming and attentive without being overbearing?

Part of why AI chatbots are so dreadful is we know the corporation / agency doesn’t care whether our problem gets resolved or not. Simply put, replacing human interactions with sterile AI interactions fails at the human level. If we grasp this reality, we realize humans cannot be replaced by AI except at the most superficial low-touch level.

In real-world situations, AI can’t be said to “understand” problems. It’s good at statistically identifying the most likely subsets of solutions, presenting those possibilities in a form that can be compared to actual results, and assigning a confidence level to each of its predictions. But this doesn’t mean its diagnoses or solutions are accurate or that it’s right in the most critical, consequential situations.

What’s interesting is that the really hard problem AI is incapable of solving is how to manage the unintended consequences of its runaway expansion in our global socio-economic system. There is more on this in my book Will You Be Richer or Poorer?: Profit, Power and A.I. in a Traumatized World.

Vision Series: AI Job Challenges and Trends (34:54 min)

Comment from Substack subscriber Kelly:

“I supported your work because of the ultimate purpose of your writing: taking control of and improving our own well-being and security. Plus, you sound a lot like my father (who has been gone for many, many years and I miss him). I feel like your writing is a reflection of what he would be telling me now about how to deal with the days, weeks and years to come. Thank you.”

